Recent commentary has sounded the alarm about the ability of so-called “emerging” technologies to tilt the balance of terror and complicate strategic stability calculations. Indeed, from the longbow and gunpowder to the tank and nuclear weapons, technological innovation has revolutionized warfare and redrawn the borders of the battlefield. The same will be true of the range of new capabilities on the horizon, not least those in the space and cyber domains. But how are these new technologies distinguishable, in qualitative and quantitative terms, from those that came before them? And how can we measure their impact in a way that is not alarmist but instead allows us to evaluate them systematically on their potential for disruption?

Comparing the impacts of heterogeneous technologies side by side (especially across two domains) can be challenging; it is the policy equivalent of comparing apples with oranges. This becomes especially apparent when we consider the numerous and wide-ranging ways in which emerging technologies have the potential to complicate strategic stability. They could, for example, provide new ways to use or stop the use of nuclear weapons (e.g., AI for missile defense could significantly change the deterrence calculation). They might blur the boundary between nuclear and non-nuclear infrastructure (e.g., the dual-use nature of satellites might increase the potential for misidentification or unintended escalation). They could create new vulnerabilities within existing systems (e.g., new technologies might enable cyberattacks on civilian infrastructure). And more fundamentally, they might even change the game (e.g., the technology, and its proliferation to new actors, could make attribution more difficult).

However, we have always had to contend with new technologies, and it is easy to forget what was once considered “emerging” and when. It is therefore important to frame any discussion of the impact of emerging technologies on strategic stability as exhibiting elements of both continuity and change. Continuity does not mean that nothing changes; rather, it helps temper overblown claims that every emerging technology represents a revolution in military affairs.

With that in mind, perhaps it is possible to develop a measured and systematic means of forecasting the actual impact of individual technological capabilities.

STREAM

The Systematic Technology Reconnaissance, Evaluation, and Adoption Methodology (STREAM), developed by RAND, facilitates an assessment of the potential relative impacts of new technologies. The STREAM approach was designed to assess current and future technologies according to a range of impact and implementation criteria. STREAM culminates in a scoring exercise in which subject-matter experts score technologies in the cyber and space domains on the impact they might have on strategic stability, and on any barriers to their implementation, over a ten-year horizon.

The findings presented in the following section are based on research that focused on the UK and its allies. The research assumes that five major factors dictate whether a given technology will affect strategic stability within the next ten years:

- Current technological maturity

- Rate of development

- Capability increase

- Ease of countering

- Crisis instability

While this is by no means a definitive list, together these metrics produce a model that does not rely too heavily on any single definition of strategic stability and that is modular (i.e., it can be arranged in different ways to reflect different definitions of strategic stability).

Research Findings

The STREAM scoring exercise generated a substantial amount of qualitative and quantitative data. In the interest of brevity, I will focus here on the highest-level findings.

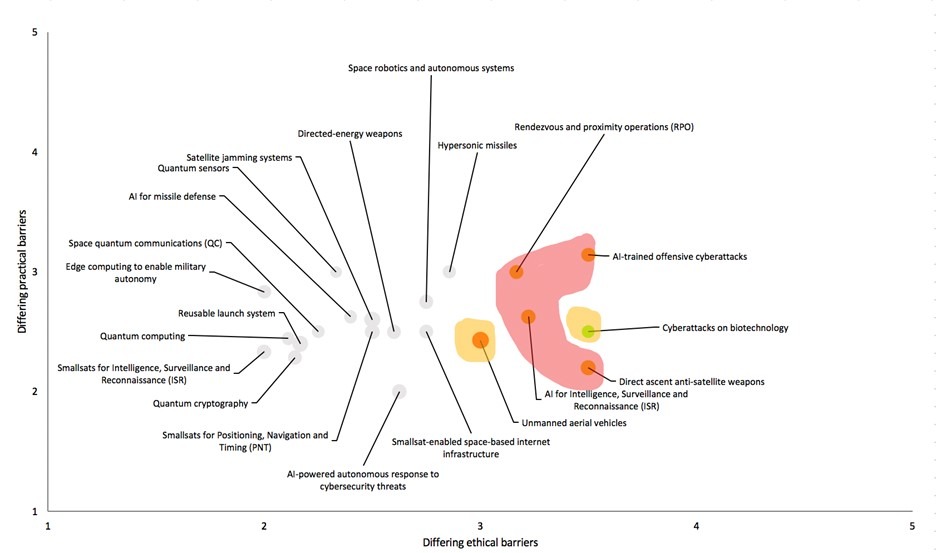

Figure 1 combines current technological maturity with rate of development, which shows where the technologies are now and how quickly they might reach maturity (if they have not already). The rate of development for each technology is deduced from the extent to which barriers to implementation differ between the UK and its allies on the one hand and the UK’s adversaries on the other. A greater disparity would translate into a higher likelihood of asymmetric capabilities in the medium to long term.
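The disparity logic described above can be sketched as a small calculation. This is a minimal illustration, not the study's actual formula: it assumes each side's ethical and practical barriers are scored on a 1 (low) to 5 (high) scale and that disparity is the mean absolute gap across barrier types. The `Barriers` class and `barrier_disparity` function are hypothetical names introduced here, and the scores are made up.

```python
from dataclasses import dataclass


@dataclass
class Barriers:
    """Barrier scores for one side (assumed scale: 1 = low, 5 = high)."""

    ethical: float
    practical: float


def barrier_disparity(allies: Barriers, adversaries: Barriers) -> float:
    """Mean absolute gap between the two sides' barriers.

    This aggregation is an assumption for illustration; a larger value is
    read as a higher likelihood of asymmetric capabilities developing.
    """
    gaps = [
        abs(allies.ethical - adversaries.ethical),
        abs(allies.practical - adversaries.practical),
    ]
    return sum(gaps) / len(gaps)


# Illustrative scores only (not study data): adversaries face lower ethical
# barriers, while practical barriers are the same for both sides.
uk_and_allies = Barriers(ethical=4, practical=3)
adversaries = Barriers(ethical=2, practical=3)
print(barrier_disparity(uk_and_allies, adversaries))  # 1.0
```

An absolute gap ignores which side is less constrained; a signed version (allies minus adversaries) would preserve that direction, which matters for the red-highlighted cases where adversaries face the lower ethical barriers.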

The technologies highlighted in red in Figure 1 are at a moderate maturity level, face lower ethical barriers for the UK’s adversaries than for the UK and its allies, and face roughly the same practical barriers for both sides. These include rendezvous and proximity operations; AI-trained offensive cyberattacks; AI for intelligence, surveillance, and reconnaissance; and direct ascent anti-satellite weapons.

The technologies highlighted in amber in Figure 1, meanwhile, paint two distinct pictures. On the one hand, cyberattacks on biotechnology are technologically immature, but they show the greatest disparity in ethical barriers between the UK and its allies and the UK’s adversaries, meaning that their development could happen quite quickly. For this reason, they are potentially destabilizing. On the other hand, unmanned aerial vehicles are technologically very mature, but lower ethical barriers might leave the UK’s adversaries less constrained than the UK and its allies in deploying them. Thus, combining technological maturity and rate of development shows that these technologies pose different kinds of threats to strategic stability.

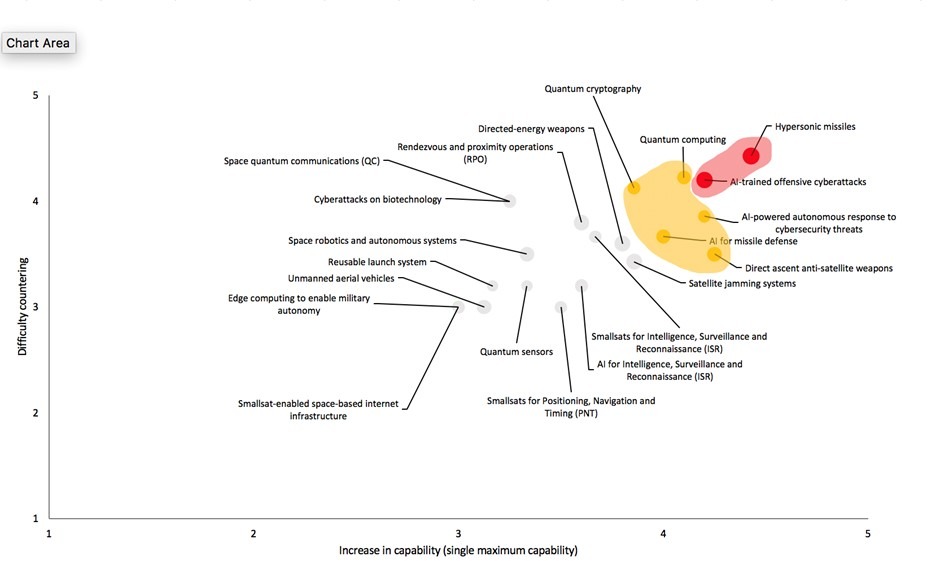

Figure 2 combines capability increase, ease of countering, and crisis instability. Combining capability increase and ease of countering shows how technologies that are difficult to counter and could significantly increase capabilities (defined as the single largest increase in offensive capability, defensive capability, or the ability to reduce or enhance the reliable functioning of command, control, communications, computers, and intelligence, or C4I) could pose a significant threat to strategic stability. Adding the crisis instability variable then indicates which technologies have the highest potential to trigger an escalatory response, possibly even one that results in a nuclear first strike.
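One way to read Figure 2's logic is as a two-step filter: take capability increase as the largest of the three sub-scores named in the definition, then band technologies by how hard they are to counter and by crisis instability. The sketch assumes a 1-to-5 scale, a "difficulty to counter" score in which higher means harder to counter (the inverse of ease of countering), and arbitrary thresholds of 4 and 3. The function names and cut-offs are hypothetical, not the study's.

```python
def capability_increase(offensive: float, defensive: float, c4i: float) -> float:
    """Single largest increase across offensive, defensive, and C4I effects."""
    return max(offensive, defensive, c4i)


def threat_band(
    capability: float,
    difficulty_to_counter: float,
    crisis_instability: float,
    high: float = 4.0,      # assumed threshold for a "high" score
    moderate: float = 3.0,  # assumed threshold for a "moderate" score
) -> str:
    """Assign a red/amber/none band in the spirit of Figure 2 (hypothetical)."""
    # Only technologies that both raise capability substantially and are
    # hard to counter are candidates for a warning band.
    if capability < high or difficulty_to_counter < high:
        return "none"
    # Crisis instability then separates red from amber.
    if crisis_instability >= high:
        return "red"
    if crisis_instability >= moderate:
        return "amber"
    return "none"


# Illustrative scores only: large offensive gain, hard to counter.
cap = capability_increase(offensive=5, defensive=2, c4i=3)
print(threat_band(cap, difficulty_to_counter=4.5, crisis_instability=4.5))  # red
print(threat_band(cap, difficulty_to_counter=4.5, crisis_instability=3.5))  # amber
```

Using `max` mirrors the "single largest increase" wording: a technology that transforms only offensive capability still scores as a large capability increase.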

The technologies highlighted in red in Figure 2 (hypersonic missiles and AI-trained offensive cyberattacks) have the potential to significantly increase capabilities, are difficult to counter, and are highly likely to exacerbate crisis instability. The technologies highlighted in amber (quantum computing, quantum cryptography, AI-powered autonomous response to cybersecurity threats, AI for missile defense, and direct ascent anti-satellite weapons) would have a similar impact on capabilities and are similarly difficult to counter, but they are slightly less likely to exacerbate crisis instability.

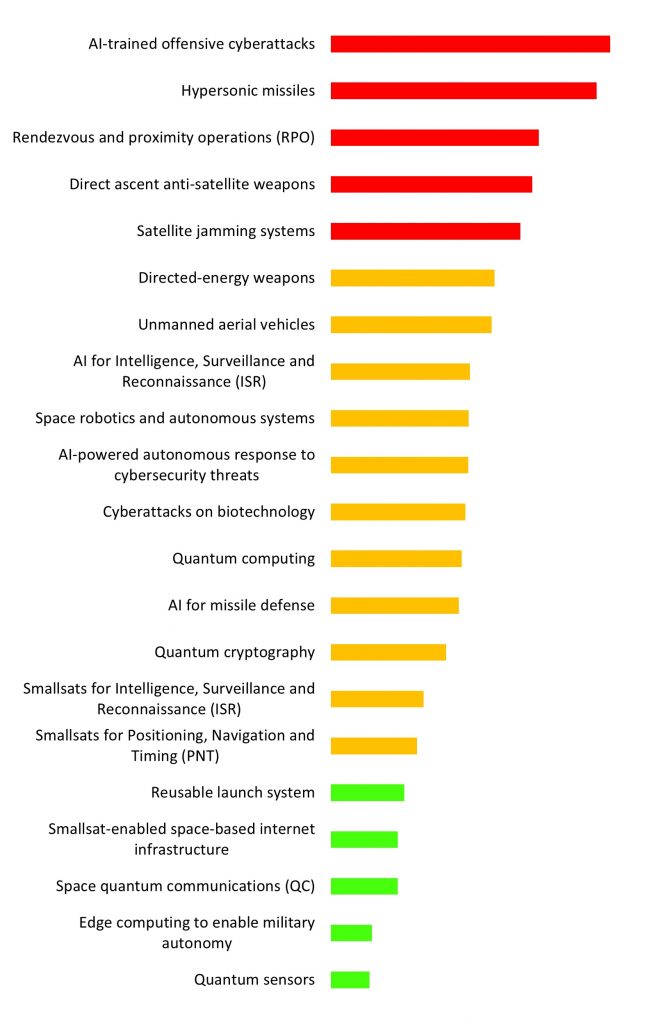

This study used a simple addition method to combine all the quantitative results. A more advanced model (such as one that uses machine learning to cluster technologies) could capture these results in a more nuanced way and include more factors, such as the likelihood of the technology being produced in the private sector or the ability to attribute its use to a specific actor. Notwithstanding the various ways it could be developed further, this independent study was intended as a proof of concept that would form the foundation for future research.

Figure 3 shows how the addition method identified the five technologies with the highest potential to disrupt strategic stability. These are: AI-trained offensive cyberattacks, hypersonic missiles, rendezvous and proximity operations, direct ascent anti-satellite weapons, and satellite jamming systems. The caveat for these findings is that the five aforementioned metrics (current technological maturity, rate of development, capability increase, ease of countering, and crisis instability) combine to produce a specific model of strategic stability that is not universal. Using the same data set, but different metrics for determining whether a given technology will impact strategic stability within the next ten years, could yield a different ranking altogether. This should not be seen as a shortcoming of the research, but rather, as an intentional characteristic.
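The addition method can be sketched in a few lines: sum each technology's five metric scores and sort. This minimal illustration assumes every metric is scored on a common scale and oriented so that a higher number means more potential disruption (so ease of countering would need to be inverted, here called `difficulty_to_counter`). The metric keys, function names, and technology labels are hypothetical, and the scores are not study data.

```python
METRICS = (
    "maturity",
    "rate_of_development",
    "capability_increase",
    "difficulty_to_counter",  # ease of countering, inverted (assumption)
    "crisis_instability",
)


def additive_score(scores: dict[str, float]) -> float:
    """Unweighted sum of the five metrics (the simple addition method)."""
    return sum(scores[m] for m in METRICS)


def top_k(technologies: dict[str, dict[str, float]], k: int = 5) -> list[str]:
    """Return the k technologies with the highest additive score."""
    ranked = sorted(
        technologies,
        key=lambda name: additive_score(technologies[name]),
        reverse=True,
    )
    return ranked[:k]


# Illustrative, made-up scores on an assumed 1-5 scale.
example = {
    "tech_a": dict.fromkeys(METRICS, 3.0),
    "tech_b": dict.fromkeys(METRICS, 5.0),
    "tech_c": dict.fromkeys(METRICS, 1.0),
}
print(top_k(example, k=2))  # ['tech_b', 'tech_a']
```

Because Python's `sorted` is stable, tied technologies keep their input order; a real ranking would need an explicit tie-breaking rule.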

Because it uses these five metrics, the research does not rely too heavily on any single definition of strategic stability, a contested concept (Russia and China, for example, have their own definitions, which differ from ours) that is often used without a clear meaning. As a result, the data set generated by this research can be arranged in different ways to reflect different definitions of strategic stability. The intent is to establish a model within which the appropriate metrics can be identified and weighted with greater precision and awareness of context.
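The modularity point can be made concrete with a weighted sum: the same scores, weighted to reflect a different definition of strategic stability, can reorder the technologies. This is a hypothetical illustration; the weights, metric keys, and technology labels are assumptions, and a weight of zero simply drops a metric from a given definition.

```python
def weighted_ranking(
    technologies: dict[str, dict[str, float]],
    weights: dict[str, float],
) -> list[str]:
    """Rank technologies by a weighted sum of their metric scores.

    Metrics missing from `weights` get weight 0, i.e. they are excluded
    from that particular definition of strategic stability.
    """

    def score(name: str) -> float:
        return sum(weights.get(m, 0.0) * v for m, v in technologies[name].items())

    return sorted(technologies, key=score, reverse=True)


# Made-up scores for two hypothetical technologies.
data = {
    "tech_x": {"maturity": 1, "capability_increase": 2, "crisis_instability": 5},
    "tech_y": {"maturity": 3, "capability_increase": 5, "crisis_instability": 2},
}

equal = {"maturity": 1.0, "capability_increase": 1.0, "crisis_instability": 1.0}
crisis_focused = {"crisis_instability": 1.0}

print(weighted_ranking(data, equal))           # ['tech_y', 'tech_x']
print(weighted_ranking(data, crisis_focused))  # ['tech_x', 'tech_y']
```

With equal weights `tech_y` ranks first (10 versus 8); a definition that cares only about crisis instability reverses the order (5 versus 2), even though the underlying data set is unchanged.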

Controlling for Bias

Another important caveat of this research is that just because a method is systematic does not mean that it is necessarily free of bias. For example, research participants of a specific background might respond differently to the STREAM scoring exercise. However, measures were taken to minimize bias to the greatest extent possible. When shortlisting technologies, I used a literature review and key informant interviews with a different group of experts from those who responded to the STREAM scoring exercise. In the scoring exercise, experts were asked about their certainty levels and to provide sources to justify their responses.

Despite these measures, one of the major qualitative findings was that even experts are susceptible to hype. This is evident in the scores for hypersonic missiles. Experts were given the opportunity to abstain from scoring any technology they were not familiar with. However, all experts (n=10) felt able to assess hypersonic missiles, indicating some familiarity with them, which might stem from the fact that hypersonic missiles are arguably the most widely covered and publicized technology in this study. A cohort of missile technologists might be more skeptical of whether hypersonic missiles really are a game-changing technology (i.e., how different they are from ICBMs).

One way to improve this research in future iterations is to conduct the study with a larger expert group and to include significance testing and other measures of uncertainty.
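As one example of a measure of uncertainty, a percentile bootstrap gives a confidence interval for a technology's mean expert score without assuming a particular score distribution. This is a generic technique swapped in for illustration, not part of the original study; the function name, the 95% level, the resample count, and the scores below are assumptions.

```python
import random
import statistics


def bootstrap_ci(
    scores: list[float],
    n_resamples: int = 10_000,
    alpha: float = 0.05,
    seed: int = 0,
) -> tuple[float, float, float]:
    """Return (mean, lower, upper) for a percentile bootstrap interval."""
    rng = random.Random(seed)  # fixed seed for reproducible intervals
    # Resample the expert scores with replacement and record each mean.
    means = sorted(
        statistics.fmean(rng.choices(scores, k=len(scores)))
        for _ in range(n_resamples)
    )
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return statistics.fmean(scores), lower, upper


# Ten made-up expert scores for one technology (not data from the study).
mean, lower, upper = bootstrap_ci([4, 5, 5, 3, 4, 5, 4, 5, 3, 4])
print(f"mean={mean:.2f}, 95% CI=({lower:.2f}, {upper:.2f})")
```

With only ten experts, such intervals are likely to be wide, and overlapping intervals for two technologies would caution against reading much into their relative ranking.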

Although some limitations remain to be addressed in future iterations, the project accomplished its objectives as a proof-of-concept study. Presenting this research at the UK Project on Nuclear Issues conference, hosted by the Royal United Services Institute, and at the NATO Early-Career Nuclear Strategists Workshop sparked interest in a novel method and enthusiasm for a new way of approaching a contemporary issue in the nuclear weapons policy community. Furthermore, STREAM is representative of a wider genre of foresight methods, which are as much about the process as about the outcome. Foresight methods are stakeholder-driven, and both the quantitative scoring exercise and the qualitative debrief give research participants opportunities to think critically and in a structured manner about these emerging technologies.

Full conference papers are forthcoming, and the next phase of this work will be undertaken by the author under the auspices of the Centre for Science and Security Studies at King’s College London. Ultimately, if the model can offer a means of measuring the impacts of distinct technologies across two domains—of comparing apples and oranges—then policymakers can be equipped to make wiser strategic decisions.

Editor’s note: RUSI has published one of the two conference papers referenced in this article.

Marina Favaro is a policy analyst at the British American Security Information Council, where she manages the “Emerging Technology” research program, and a consultant at King’s College London’s Centre for Science and Security Studies. Her areas of focus include the future of warfare and the impact of new technologies on arms control. Previously, Marina worked as an analyst at RAND Europe, where her research focused on space governance, cybersecurity, defence innovation, and the impact of emerging technologies on society. Marina conducts both qualitative and quantitative research through a variety of methods, including futures and foresight methods (e.g., horizon scanning, STREAM, and scenario building). Marina holds a first-class master’s degree in international relations and politics from the University of Cambridge. She sits on the British Pugwash Executive Committee, which contributes to scientific, evidence-based policymaking and promotes international dialogue across divides.

The views expressed are those of the author and do not reflect the official position of the United States Military Academy, Department of the Army, or Department of Defense.

Image: Artist’s rendition of one of two variants of the Hypersonic Air-breathing Weapon Concept. DARPA and the US Air Force recently successfully completed captive carry tests of both variants. (Credit: DARPA)